Is the Internet of "Intelligent" Things (IoIT) becoming a whole new ballgame?

The Internet of Things (IoT) keeps advancing and is drawing worldwide attention. The volume of data it generates is growing enormously because of the sheer number of connected endpoints, and that data can yield unparalleled insights. So the question arises: how can we get the most out of the data generated by all these streams? The answer is artificial intelligence, and the concept that results is the Internet of Intelligent Things.

So, what are intelligent things?

Internet of Things technology has become well known. IoT refers to the billions of Internet-connected devices around the world. But the enormous volume of data continuously streaming from these devices is difficult to handle. This is where the Internet of Intelligent Things lightens the burden.

Experts have long argued that IoT should be put to better use, with artificial intelligence helping to build better solutions. In recent years it has become clear that IoT networks become more intelligent when combined with technologies such as machine learning and artificial intelligence. AI makes better use of the extensive connectivity and large data streams that IoT devices provide.

What benefits does AI provide?

Various solutions exist for evaluating and understanding IoT data, but machine learning and artificial intelligence can draw more detailed insights from it and act on them quickly. Using computer vision and audio recognition, AI can spot unusual patterns with high accuracy, reducing the need for manual human review. Almost every business is aware of the benefits of IoT, but the advantages of intelligent things are only now emerging. Intelligent things let enterprises operate in new ways; consider the three examples below.

Quicker responses

IoT clearly helps businesses of all sizes monitor operations in detail and gain deep insight into daily activities. However, it is not always possible to react quickly to such an enormous volume of data. With artificial intelligence, businesses can respond to situations faster, because AI processes data more quickly and analyzes it more deeply.

Consider Fujitsu, which has demonstrated intelligent things that send alerts when workers' productivity drops because of heat stress.

Banks can use AI to evaluate real-time ATM usage data alongside automated analysis of CCTV footage. This analysis can help track fraudulent transactions and the fraudsters behind them.
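In practice, the pattern spotting described above relies on trained ML models. As a much simpler, purely statistical stand-in, the Python sketch below flags unusual sensor readings using the modified z-score, which is built from the median and the median absolute deviation (MAD) and uses the conventional 0.6745 factor and 3.5 cut-off. The function name, the threshold, and the temperature values are illustrative assumptions. They do not come from Fujitsu, the banks, or any other system mentioned here.

```python
from statistics import median
from typing import List, Sequence

def modified_zscore_anomalies(readings: Sequence[float], threshold: float = 3.5) -> List[int]:
    """Return indices of readings whose modified z-score exceeds `threshold`.

    Modified z-score = 0.6745 * (x - median) / MAD, where MAD is the median
    absolute deviation from the median. Both statistics are robust, so a
    single large spike cannot mask itself by inflating the spread estimate.
    """
    if not readings:
        return []
    med = median(readings)
    mad = median(abs(x - med) for x in readings)
    if mad == 0:
        # More than half the readings are identical; this sketch skips
        # detection rather than dividing by zero.
        return []
    return [i for i, x in enumerate(readings)
            if abs(0.6745 * (x - med) / mad) > threshold]

# Hypothetical temperature stream (degrees C) with one spike at index 5.
temps = [21.0, 21.2, 20.9, 21.1, 21.0, 35.0, 21.2, 20.8]
print(modified_zscore_anomalies(temps))  # [5]
```

A median-based score is used instead of the classic mean and standard-deviation z-score because, in a short window, one large outlier inflates the standard deviation enough to stay under a fixed cut-off.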

Preventing failures with early maintenance

Industries can respond quickly when equipment fails. They don't have to rely on a human inspector or supervisor to report a mishap or a failed piece of equipment. Such events are reported promptly, and the site can respond immediately. AI-powered IoT can even prevent mishaps and failures before they happen.

Consider an Australian utility organisation that applies predictive analytics to IoT data streams to forecast which factors are likely to cause equipment failures. By acting early, it avoids the cost of major repairs.
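Predictive maintenance often starts with something as simple as extrapolating a degradation trend. The Python sketch below fits a least-squares line to vibration readings and estimates how many operating hours remain before the trend crosses an alarm level. The readings, the 7.0 mm/s alarm level, and the function name are hypothetical. The utility's actual analytics are not described in the source.

```python
from typing import Optional, Sequence

def hours_until_threshold(hours: Sequence[float], values: Sequence[float],
                          threshold: float) -> Optional[float]:
    """Fit a least-squares line to (hours, values) and estimate how many hours
    after the latest sample the fitted trend reaches `threshold`.

    Returns None when the trend is flat or falling (no crossing ahead) and
    0.0 when the trend line is already at or past the threshold.
    """
    n = len(hours)
    if n < 2 or n != len(values):
        raise ValueError("need at least two paired samples")
    mean_t = sum(hours) / n
    mean_v = sum(values) / n
    sxx = sum((t - mean_t) ** 2 for t in hours)
    if sxx == 0:
        raise ValueError("sample times must not all be equal")
    sxy = sum((t - mean_t) * (v - mean_v) for t, v in zip(hours, values))
    slope = sxy / sxx
    intercept = mean_v - slope * mean_t
    if slope <= 0:
        return None
    crossing = (threshold - intercept) / slope  # time at which the line hits threshold
    return max(0.0, crossing - max(hours))

# Hypothetical vibration readings (mm/s) taken every 10 operating hours.
remaining = hours_until_threshold([0, 10, 20, 30, 40], [2.0, 2.5, 3.0, 3.5, 4.0], 7.0)
print(remaining)  # roughly 60 hours after the last reading
```

A straight line is the crudest possible degradation model. Real wear often accelerates, so production systems typically use richer models and re-fit as new readings arrive.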

Improved efficiency

The vast number of data streams that IoT endpoints provide allows businesses to measure the performance of every component in depth. But extracting knowledge from all these streams quickly requires intelligent computing.

Consider Rolls-Royce, which uses AI-powered computing to analyse data from aircraft engines. With this data, Rolls-Royce can modify designs and operations to reduce fuel consumption and improve performance.

Utilising the internet of intelligent things

IoT endpoints clearly provide real-time data that brings significant advantages to businesses across a wide range of sectors. But leveraging this data to gain those benefits is the real challenge.

Although AI already has an astonishing impact on our day-to-day lives, we have yet to witness the full combination of the Internet of Things and artificial intelligence. It could prove to be a game-changer for almost every industry. To tap the real potential of the IoIT (Internet of Intelligent Things), you need someone knowledgeable enough to guide you in harnessing the full power of connected devices.

The Contribution of IoT to the Automation Industry

Emerging technologies and accelerating innovation are constantly reshaping the world in one form or another. One such technology is IoT (Internet of Things). This article discusses the growth of IoT in the automation industry.

IoT has brought changes to our lives that we could hardly have imagined. It also plays a key role in the automation industry, where it helps create and streamline productive, responsive, and affordable system architectures.

IoT

Put simply, IoT connects different devices via the internet so that they can share data, which allows business processes to be automated comprehensively. Automation industries, especially distribution and manufacturing, embed a huge number of sensors in their devices. Connecting those sensors to the internet helps reduce production cost, time, and effort while supporting lean manufacturing. An IoT framework lets machines communicate directly with one another (machine-to-machine, or M2M), which eases data transfer. Quality checking has also become easier, because equipment and processors can monitor devices for faults.
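Machine-to-machine communication in IoT is commonly organised as publish/subscribe: a sensor publishes readings on a topic, and any machine subscribed to that topic reacts without a human in the loop. Real deployments typically use a broker protocol such as MQTT. The Python sketch below is only an in-process illustration of the pattern. The `MessageBus` class, the topic name, and the 70 °C rule are hypothetical.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, Dict, List

Handler = Callable[[str, dict], None]

class MessageBus:
    """Minimal in-process, topic-based publish/subscribe bus (illustration only)."""

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        """Register a handler to receive every message published on `topic`."""
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, payload: dict) -> int:
        """Deliver `payload` to all handlers on `topic`; return how many received it."""
        handlers = self._subscribers.get(topic, [])
        for handler in handlers:
            handler(topic, payload)
        return len(handlers)

# Hypothetical wiring: a motor temperature sensor and a cooling-fan actuator.
bus = MessageBus()
fan_state: Dict[str, bool] = {"on": False}

def fan_controller(topic: str, payload: dict) -> None:
    # Actuator logic: run the fan whenever the motor is above 70 degrees C.
    fan_state["on"] = payload["celsius"] > 70.0

bus.subscribe("line1/motor/temperature", fan_controller)
bus.publish("line1/motor/temperature", {"celsius": 74.5})
print(fan_state["on"])  # True
```

The publisher never needs to know who is listening. New machines can be added by subscribing to existing topics, which is the property that makes M2M data exchange easy to extend.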

IoT is the Key Player for Smart Device Manufacturers

Sensors and actuators are the core components of the IoT industry. Sensors measure physical variables, such as magnetic, thermal, or mechanical quantities. Actuators carry out commands based on those measurements. IoT's impact has grown because it lets devices be used to their fullest capacity, and the goal of leveraging IoT is to make devices communicate effortlessly and seamlessly. Examples include smart lights, smoke detectors, and thermostats.

This is the era of smart manufacturing. Standardized optimization and seamless use of data are essential for manufacturing smart devices. Thanks to the IoT revolution, business practices are becoming less error-prone and more productive. IoT applied to manufacturing is known as IIoT, the Industrial Internet of Things. According to a Business Insider report, the number of installed IoT devices was expected to grow from 237 million in 2015 to 923 million by the end of 2020. Many big players are racing to manufacture IoT-enabled smart devices.

Manufacturers gain many benefits from IIoT, some of which they may not even be aware of:

- Running a factory is not easy, and many factors affect how smoothly it operates. Everything needs to be analyzed, from the precise functioning of machines to meeting quality standards. With IoT, manufacturers can capture sensor signals and process them more effectively. Machine faults can be predicted without hiring a dedicated team to inspect equipment for damage.

- Businesses run on assets, whether tangible, intangible, or current. Tracking assets in real time is difficult when enterprises operate from remote locations, where many factors affect connectivity. IoT makes it easy to track devices, stock, and many other resources, and IoT-powered solutions such as ERP systems can trace devices and machines remotely. This helps reduce costs and risks and can open new revenue channels.

- Superior product quality is a priority for every business owner. High-quality products lead to higher sales, less waste, and greater employee productivity. But you cannot achieve this if your manufacturing machines are faulty. IoT helps you calibrate and maintain your machines so that production and product quality don't degrade. Any fault or change in a device, piece of equipment, or machine can be sensed, and an alert can be sent so that you can take immediate and appropriate action.

- Every business owner wants minimal downtime, and IoT can help achieve it. As mentioned above, IoT's ability to detect issues through sensors helps resolve them early. Internet-connected devices can improve performance and reduce losses caused by downtime.
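To track whether sensor-driven maintenance is actually reducing downtime, plants commonly report availability together with mean time between failures (MTBF) and mean time to repair (MTTR). The Python sketch below computes these metrics for one machine from a list of outage durations. The function name and the sample month are hypothetical, and definitions vary slightly between organisations (for example, whether planned maintenance counts as downtime).

```python
from typing import Dict, List

def reliability_metrics(period_hours: float, outage_hours: List[float]) -> Dict[str, float]:
    """Availability, MTBF and MTTR for one machine over an observation period.

    period_hours: total hours observed.
    outage_hours: downtime duration (hours) for each failure in the period.
    """
    if period_hours <= 0:
        raise ValueError("period must be positive")
    downtime = sum(outage_hours)
    if downtime > period_hours:
        raise ValueError("downtime exceeds the observation period")
    uptime = period_hours - downtime
    failures = len(outage_hours)
    return {
        "availability": uptime / period_hours,                    # fraction of time running
        "mtbf": uptime / failures if failures else float("inf"),  # mean time between failures
        "mttr": downtime / failures if failures else 0.0,         # mean time to repair
    }

# Hypothetical 30-day month (720 h) with three stoppages of 4, 2 and 6 hours.
print(reliability_metrics(720, [4.0, 2.0, 6.0]))
# availability about 0.983, mtbf 236.0 h, mttr 4.0 h
```

The three numbers are consistent with each other: availability equals MTBF / (MTBF + MTTR), here 236 / 240. Sensors that catch faults earlier show up as a lower MTTR, and prevented failures show up as a higher MTBF.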

How Can You Stay at the Top of the Market Graph?

Every manufacturing company wants to stay ahead of its competitors while retaining its existing customer base. However, technology is evolving, and customer demands are changing with it. These changes affect the production process flow and the business as a whole. Organizations can stay ahead of their rivals by adopting IoT in their manufacturing techniques and products, which also helps them strategize more effectively. Staying at the top lets you market your products in greater quantity and more quickly.

The Combination of IoT and ERP

Combining IoT with ERP is an important step toward easing your workload. If you struggle to keep track of stock, purchases, and sales, or worry about keeping up with technology, consider ERP software to automate your industrial processes. ERP software integrated with IoT devices can make your life much easier.

In conclusion, IoT gives businesses more visibility, better collaboration, and easier, more streamlined work, all of which help them grow.

Debunking the Top 4 Myths about Public Cloud Security

Many businesses are moving to the cloud, but they are still unsure which cloud to choose. Most small and medium-sized businesses prefer public clouds, yet they still worry about privacy, security, and costs precisely because the cloud is public. Much confusion about data security persists when people choose cloud computing. Public clouds are a recommended way for businesses to cut IT costs and improve scalability and flexibility.

Security and control are two different things, and this difference shows when comparing data security in cloud computing with that of a traditional data center. Cloud computing offers a company several benefits. With the public cloud, companies get quick provisioning, deployment, and scaling of IT resources at much lower cost. They can enter new markets more easily and reduce development time and waste.

In fact, a public cloud can serve the same purposes as traditional on-premises platforms, or serve them better. Despite these benefits, some myths persist. We are here to debunk some of the myths about the public cloud so that enterprises are not misled.

So, here are the top four myths about the security of the public cloud:

- You can’t control your data location/residency

- Customers on the same server are a threat to each other

- There is a lack of inherent transparency in the public cloud

- The CSP (Cloud Service Provider) alone is responsible for security

You can’t control your data location/residency

Data residency is one of the prime concerns, because several countries have laws that make exporting personal data to other countries a criminal offense. Data residency matters most when handling personally identifiable information, such as any kind of financial information or private health information. In these cases, the cloud service provider should be able to run its data centers in the locations you require. Resellers that provide cloud services to their customers should at least choose providers that can meet location-specific requirements. This issue therefore need not be a cause for stress: you can choose a quality cloud service provider that keeps your data in the locations you choose and remains accountable for it.
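Once a provider lets you choose where data lives, residency becomes a policy you can check automatically against your data inventory. The Python sketch below flags datasets containing personal data that are stored outside an allowed set of regions. The `Dataset` record, the region identifiers, and the inventory are hypothetical. Real provider region names and the rules your jurisdiction imposes will differ.

```python
from dataclasses import dataclass
from typing import Iterable, List, Set

@dataclass(frozen=True)
class Dataset:
    name: str
    contains_pii: bool  # personally identifiable information present?
    region: str         # hypothetical provider region where the data is stored

def residency_violations(datasets: Iterable[Dataset], allowed_pii_regions: Set[str]) -> List[str]:
    """Return names of PII datasets stored outside the allowed regions."""
    return [d.name for d in datasets
            if d.contains_pii and d.region not in allowed_pii_regions]

# Example policy: personal data must stay in (hypothetical) EU regions.
ALLOWED_PII_REGIONS = {"eu-central", "eu-west"}
inventory = [
    Dataset("customer-profiles", True, "eu-central"),
    Dataset("health-records", True, "us-east"),
    Dataset("product-catalog", False, "us-east"),
]
print(residency_violations(inventory, ALLOWED_PII_REGIONS))  # ['health-records']
```

Non-personal data such as the product catalog is deliberately left unconstrained. This reflects the point above that residency matters most for personal, financial, and health data.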

Customers on the same server are a threat to each other

A persistent myth about multi-tenant cloud infrastructure is that it is more vulnerable to attacks than traditional IT infrastructure. In a public cloud, tenants share physical resources such as storage, compute, and network. This sharing raises security concerns among tenants, who fear attacks from other tenants on the same cloud. In reality, it is very difficult for one tenant to attack another in a public cloud environment. The hypervisor layer is primarily responsible for isolating tenants from one another, and hypervisors are designed to be highly secure and difficult to attack. In addition, some cloud providers offer further options to reduce multi-tenancy risks even more. Before subscribing to a cloud service provider's offerings, you should fully understand your requirements.

There is a lack of inherent transparency in the public cloud

No business customer likes a lack of transparency; customers look for it everywhere. When a business offers visibility, customers find it easier to trust it. Mistrust is the main reason consumers back away from cloud services, and building trust requires both transparency and security. You can evaluate a cloud service provider by checking whether it holds relevant security compliance certifications. You can also check whether the provider follows the Cloud Trust Protocol. Through this protocol, customers receive accurate information confirming that their data in the cloud is handled as stated and according to the agreed rules.

With this information, companies can make sound choices about their data and processes. They can decide confidently which data should go on which cloud and how to sustain their risk management decisions about cloud services. Visibility improves, and transparency becomes affordable. Not every cloud provider will prioritize this or spend money on maintaining 100% transparency, so users cannot always insist on it. Still, some level of transparency will always be maintained.

The CSP (Cloud Service Provider) alone is responsible for security

The public cloud has the upper hand because organizations can afford resources such as compute, storage space, and RAM. Not everyone can afford a dedicated server, so the public cloud comes to the rescue.

The point is simple: you don't have to build everything from scratch, because someone has already built it for you. You don't need to buy your own server, or build a data center for that matter, unless that is specifically what you have planned for your IT infrastructure.

Regardless, it is still your data and your applications, so you remain responsible for them. Cloud providers commonly describe this as a shared responsibility model. The provider secures the underlying infrastructure, while the customer is responsible for its own data, access management, and configuration. It is your duty to select a cloud vendor that meets your needs and takes security, disaster prevention, and disaster recovery seriously. Do not take a casual approach when choosing a provider or the package it offers. Even if the vendor knows how to handle security, you should be knowledgeable enough to understand the risks and make your own decisions.

Conclusion

In many cases, a public cloud can provide more security than a conventional data center. Cloud service providers now offer multiple levels of security backed by strong tools and scanners. The growing number of cyber threats has forced them to focus on preventing attacks.

Importance of SOC (Security Operations Center) for Small and Medium-Sized Businesses

With the number of threats increasing worldwide, small and mid-sized businesses (SMBs) face numerous security issues. They want security services that fit their budgets yet still provide proper protection. A major problem SMBs face is a lack of personnel to build and operate their own SOC (Security Operations Center), which puts Security Information and Event Management (SIEM) out of reach. As a result, many such organizations are turning to outsourced SOC as a Service, which can suit their needs and improve their security posture. Many small to mid-sized companies face a "trio of cyber security troubles":

- Recent ransomware such as Petya and WannaCry has gripped the world using increasingly modern attack techniques.

- As cyber threats increase, security expertise is becoming scarcer, with a projected 3.5 million unfilled cyber security positions by 2021.

- According to Verizon's Data Breach Investigations Report (DBIR), attackers are targeting small and mid-sized businesses and wreaking havoc because these businesses lack proper SOC services.

As a consequence, SMBs are looking for ways to deal with these growing challenges, and many are turning to reputable security service providers that offer SOC as a Service. This is the right decision, but finding and choosing the right SOC provider is not easy. If your vendor lacks the capabilities an effective SOC requires and focuses narrowly on managed detection, the result can be a major gap in your security posture.

If you are unsure how to choose a security provider, follow the checklist below to find a comprehensive SOC service:

Complexity level

A recent Gartner study identified MDR (managed detection and response) as a fast-growing market. Detection is used to recognize threats, but a SOC should also provide prevention and IR (incident response) when an incident occurs.

When evaluating a SOC, look for a comprehensive security package that includes decisive and effective IR, protection from DDoS attacks, ransomware, and data breaches, and disaster recovery. If a vendor doesn't provide 24/7 SOC and IR services, its offering should not be called a SOC.

Real-Time Threat Analysis

Monitoring threats in real time using detection services and forensics is a crucial SOC task, and it should cover all security incidents 24/7. A small security team can't keep up with noisy, complex SIEM (Security Information and Event Management) tools. Unable to filter out false alarms, the team falls short on the security matters that really count.

Make sure the SOC provider can detect threats intelligently around the clock so that you can sleep peacefully.
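A large share of SIEM noise is the same rule firing repeatedly for the same source. A common first step is to collapse those repeats into one alert with a count before an analyst sees them. The Python sketch below groups alerts by (rule, source) within a time window. The `Alert` fields, rule names, and 300-second window are hypothetical. Deduplication only reduces volume and does not by itself decide which alerts are false positives.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

@dataclass(frozen=True)
class Alert:
    ts: float    # event time in seconds
    rule: str    # detection rule that fired
    source: str  # e.g. source IP address

def deduplicate(alerts: List[Alert], window_s: float = 300.0) -> List[Tuple[Alert, int]]:
    """Collapse alerts sharing (rule, source) that arrive within `window_s` seconds
    of the first alert in their group. Returns (first_alert, count) pairs in
    time order of each group's first alert."""
    open_groups: Dict[Tuple[str, str], list] = {}
    ordered: List[list] = []
    for alert in sorted(alerts, key=lambda a: a.ts):
        key = (alert.rule, alert.source)
        group = open_groups.get(key)
        if group is not None and alert.ts - group[0].ts <= window_s:
            group[1] += 1           # repeat within the window: just count it
        else:
            group = [alert, 1]      # first alert, or the window has expired
            open_groups[key] = group
            ordered.append(group)
    return [(first, count) for first, count in ordered]

raw = [
    Alert(0, "ssh-brute-force", "203.0.113.9"),
    Alert(60, "ssh-brute-force", "203.0.113.9"),
    Alert(120, "ssh-brute-force", "203.0.113.9"),
    Alert(90, "port-scan", "198.51.100.4"),
    Alert(1000, "ssh-brute-force", "203.0.113.9"),
]
for first, count in deduplicate(raw):
    print(first.rule, first.source, first.ts, count)
```

Five raw alerts become three entries: one SSH brute-force group with a count of 3, one port scan, and a fresh SSH group once the window has expired.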

Armed Threat Hunting

With hacking techniques multiplying and attackers getting smarter, it is very hard to detect every type of attack. Staying armed means preparing the network in advance and searching for threats proactively, so that defenses can adapt to cyber-attacks that may have emerged only hours ago. This is a huge responsibility for security specialists. It requires learning the unique requirements of each client's network and hunting down threats that slip past the detection process. For this to work, specialists need relevant, efficient threat intelligence sources, machine learning techniques, and any other means that help identify valid security incidents affecting customers.
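One concrete hunting task is sweeping connection logs for indicators of compromise (IOCs) taken from threat intelligence, so that activity the real-time detectors missed still gets found. The Python sketch below uses the standard-library `ipaddress` module to match destination IPs against IOC networks. The feed entries and host names are hypothetical and use documentation-only address ranges. Real hunts also cover domains, file hashes, and behavioural patterns.

```python
import ipaddress
from typing import Iterable, List, Tuple

def hunt_iocs(connections: Iterable[Tuple[str, str]],
              ioc_networks: Iterable[str]) -> List[Tuple[str, str]]:
    """Return (host, dest_ip) pairs whose destination lies in any IOC network.

    connections:  (internal host, destination IP) pairs taken from logs.
    ioc_networks: CIDR strings, e.g. exported from a threat intelligence feed.
    """
    nets = [ipaddress.ip_network(cidr) for cidr in ioc_networks]
    hits = []
    for host, dest in connections:
        ip = ipaddress.ip_address(dest)
        # Membership is simply False (not an error) when IPv4 and IPv6 are mixed.
        if any(ip in net for net in nets):
            hits.append((host, dest))
    return hits

iocs = ["203.0.113.7/32", "198.51.100.0/24"]
traffic = [
    ("web-01", "203.0.113.7"),
    ("db-01", "10.0.0.5"),
    ("hr-laptop", "198.51.100.23"),
]
print(hunt_iocs(traffic, iocs))  # [('web-01', '203.0.113.7'), ('hr-laptop', '198.51.100.23')]
```

Because the sweep runs over stored logs, it can be repeated whenever the feed gains new indicators. This is how threats that passed through detection hours or days earlier can still be caught.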

Compliance Control

Compliance is a vital factor when implementing a SOC. Every SOC should support compliance with standards and regulations such as PCI DSS, HITECH, HIPAA, GLBA, and FFIEC, along with other standards that regulated industries must follow. The SOC provider should offer templates for recommended security checks and vulnerability assessments and verify whether the business is meeting the relevant regulatory requirements.

Hackers are not the only thing that can cost you big money: failing to meet compliance requirements can lead to penalties as well. Make sure your SOC service provider handles all of this.

Strategic Advising

By monitoring the network and hunting for emerging threats, security engineers gain an in-depth understanding of your company's network. Knowing the network topology and the location of vital assets helps them protect those assets with a proper defense strategy. Demand this from your outsourced SOC provider, as it contributes to designing and improving your security posture.

Beyond scalable cloud-based technology, a clearly defined IR (Incident Response) process and a team of well-trained security specialists should give clients insight into their organization's security posture. This, in turn, helps them improve and run their business processes more effectively.

Defined Pricing

Pricing is an issue everyone faces. Prices that fluctuate from bill to bill erode customer trust, so the SOC service provider should offer fixed pricing plans. Rates should depend on the number of sensors and users rather than on log data volume or the number of servers monitored. Such predictable, clearly defined pricing models are essential for SMBs, which struggle with fluctuating costs and can't afford highly expensive managed services. SOC providers should therefore avoid unpredictable costs.
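The difference between the two pricing bases is easy to see with a little arithmetic. The Python sketch below compares a bill based on sensor and user counts with a bill based on ingested log volume over three months. All rates, counts, and log volumes are hypothetical, and real SOC contracts combine these factors in many ways.

```python
from typing import List

def per_seat_bill(sensors: int, users: int, rate_per_sensor: int, rate_per_user: int) -> int:
    """Monthly bill that depends only on sensor and user counts."""
    return sensors * rate_per_sensor + users * rate_per_user

def volume_bill(log_gb: int, rate_per_gb: int) -> int:
    """Monthly bill that scales with ingested log volume."""
    return log_gb * rate_per_gb

# Hypothetical customer: 10 sensors, 50 users, and three months of log volume (GB).
monthly_log_gb: List[int] = [200, 450, 300]
seat_bills = [per_seat_bill(10, 50, rate_per_sensor=100, rate_per_user=10) for _ in monthly_log_gb]
vol_bills = [volume_bill(gb, rate_per_gb=5) for gb in monthly_log_gb]
print(seat_bills)  # [1500, 1500, 1500]
print(vol_bills)   # [1000, 2250, 1500]
```

Under volume-based pricing, a month with a log spike (for example, during an attack) more than doubles the bill. Under per-sensor and per-user pricing, the same month costs exactly what the SMB budgeted.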

To summarize

All these factors are important when choosing a SOC provider. This checklist shows which things you should not compromise on when you outsource your SOC.

Know About SOC (Security Operations Center) and the Rise of SIS (Security Insight Services)

What is SOC?

A SOC (Security Operations Center) is like an army that protects you from cyber-attacks and online threats. It resembles a force working 24/7, dedicated to preventing, detecting, assessing, and responding to cyber threats and vulnerabilities. The team is highly skilled and organized, with the mission of continuously monitoring and improving an organization's security posture.

The Strategy of SOC

The SOC strategy has to be business-specific and clearly outlined. It depends on support and sponsorship from the executive level; without it, the SOC cannot work properly. The SOC must be an asset to the rest of the organization, and its aim should be to serve the company's needs.

The Infrastructure

Careful planning is the key to making any model successful, and the same is true of SOC environment design. Physical security, layout, electrical arrangements for the equipment, lighting, and acoustics must all be considered. The SOC needs specific areas such as a war room, an operations room, and supervisors' offices. The design should ensure visibility, comfort, control, and efficiency in every area.

The Technological Environment

Once the mission and scope of the SOC are defined, the next step is designing the underlying technology infrastructure. A comprehensive technological environment requires several components, such as firewalls, breach detection solutions, IPSs/IDSs, probes, and, of course, a SIEM. Efficient and effective data collection is essential for a good SOC. Packet captures, telemetry, data flows, Syslog messages, and many similar events must be collected, correlated, and analyzed from a security perspective. It is also essential to monitor information about vulnerabilities that could affect the entire ecosystem.
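To make "collect, correlate, and analyze" concrete, the Python sketch below parses Syslog lines for failed SSH logins and counts them per source IP, flagging likely brute-force sources. The regular expression targets the common OpenSSH "Failed password" message, but the exact wording can vary by OpenSSH version and logging configuration. The sample lines and the threshold of 5 are hypothetical. A SIEM would normally do this with its own parsers and correlation rules.

```python
import re
from collections import Counter
from typing import Dict, Iterable

# Matches OpenSSH-style failure messages such as:
#   "... sshd[1234]: Failed password for invalid user admin from 203.0.113.9 port 52211 ssh2"
FAILED_SSH = re.compile(
    r"sshd\[\d+\]: Failed password for (?:invalid user )?(?P<user>\S+) "
    r"from (?P<ip>\S+) port \d+"
)

def brute_force_suspects(lines: Iterable[str], threshold: int = 5) -> Dict[str, int]:
    """Count failed SSH logins per source IP; return IPs at or above `threshold`."""
    counts: Counter = Counter()
    for line in lines:
        match = FAILED_SSH.search(line)
        if match:
            counts[match.group("ip")] += 1
    return {ip: n for ip, n in counts.items() if n >= threshold}

sample = [
    f"Mar  3 10:15:0{i} web01 sshd[4242]: Failed password for invalid user admin "
    f"from 203.0.113.9 port 5221{i} ssh2"
    for i in range(6)
] + [
    "Mar  3 10:16:00 web01 sshd[4243]: Failed password for alice from 198.51.100.4 port 40000 ssh2",
    "Mar  3 10:16:05 web01 sshd[4244]: Accepted password for alice from 198.51.100.4 port 40001 ssh2",
]
print(brute_force_suspects(sample))  # {'203.0.113.9': 6}
```

A single mistyped password (alice) stays below the threshold, while six failures from one address in a few seconds are surfaced as a single, correlated finding.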

The Team and Processes

Although technical aspects are highly important, even a huge, high-tech control room is worthless without people and proper processes.

Just as a fully equipped car is useless without a driver, an organization is empty without people and policies. Technology, processes, and people are the pillars of a SOC.

A SOC is a team, and every winning team follows some rules. Apart from engineers, analysts, and DevOps staff, there will be leaders, and leadership skills matter for everyone. Team members are assigned to different tiers, covering real-time event monitoring and analysis, security incident and data breach detection, incident response, and finally remediation. Coordination, collaboration, efficiency, and timing are paramount. Every member has to be aware of the SOC's strategy and mission, which is why leadership plays a key role. The SOC manager must inspire and motivate team members so that they contribute to the organization's vision and mission. After all, providing 24/7 service while handling the stress isn't easy.

Selecting team members who add value is challenging, because the required skill set is broad and candidates also need enthusiasm. The team must also be sized correctly, neither understaffed nor overstaffed.

Given this, a hybrid model could prove viable, as it combines internal teams with outsourced managed service providers.

The Types of SOC models

There are several kinds of SOC models:

Virtual SOC

• It has no dedicated solution/facility

• Members are part-time

• The team is active only when critical incidents occur

Dedicated SOC

• Facility is dedicated

• The team is also dedicated

• Totally in-house team

Co-managed / Distributed SOC

• Both semi-dedicated and dedicated teams

• Usually operates 5x8 (business hours, five days a week)

• It becomes co-managed when paired with MSSP (Managed Security Service Provider)

Command SOC

• Coordination with other SOCs

• Offers situational awareness, threat intelligence, and additional expertise

• Only rarely involved directly in day-to-day operations

NOC (Network Operations Center) / Multifunction SOC

• Dedicated facility and team

• Performs all critical IT and security operations 24/7 with common facilities

• Helps in reducing the costs of the organization

Fusion SOC

A single SOC facility that combines traditional and newer SOC functions, such as CIRT (Computer Incident Response Team), threat intelligence, and OT (Operational Technology) functions.

Fully Outsourced SOC

In addition to the six models above, there is the fully outsourced model. Here the service provider builds and operates the SOC, and the customer's enterprise is involved only minimally, in a supervisory role.

The Intelligence and Approach

To enhance the organization's security posture, the SOC has to be both reactive and proactive, because it needs to carry out vulnerability management. Its priority should be a robust approach to vulnerability handling and risk assessment. The OWASP approach can also be taken into consideration. In addition, a context-aware threat intelligence approach should be implemented to diagnose and prevent threats more effectively and add more value.

The Essentials

Creating and operating a SOC demands quality infrastructure, enthusiasm, teamwork, and skills. It should follow best practices and frameworks such as COBIT and ITIL, which are vital for complying with standards such as PCI DSS and ISO/IEC 27001:2013.

ITIL is an excellent source of guidance on service strategy and design, service level management, and coordinating SOC functions with incident management processes.

COBIT, and especially its Maturity Model (COBIT-MM), should also be considered a premium guideline for assessing how mature a SOC is.

The SOC's performance has to be measured appropriately in all aspects, so KPIs must be well defined to support ITIL's continual service improvement. These steps will help generate the best results from the SOC and add value to the organization.
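Two KPIs that SOCs commonly report are mean time to detect (MTTD) and mean time to respond (MTTR). The Python sketch below computes both from incident timestamps. The `Incident` record and the sample data are hypothetical, and exact definitions vary. For example, some teams measure response time from detection to containment rather than to full resolution, and the start of an attack is often only an estimate.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Iterable, List, Tuple

@dataclass(frozen=True)
class Incident:
    occurred: datetime  # when the malicious activity started (often estimated)
    detected: datetime  # when the SOC raised the incident
    resolved: datetime  # when remediation was completed

def _mean(deltas: Iterable[timedelta]) -> timedelta:
    items = list(deltas)
    if not items:
        raise ValueError("no incidents to average")
    return sum(items, timedelta()) / len(items)

def soc_kpis(incidents: List[Incident]) -> Tuple[timedelta, timedelta]:
    """Return (mean time to detect, mean time to respond)."""
    mttd = _mean(i.detected - i.occurred for i in incidents)
    mttr = _mean(i.resolved - i.detected for i in incidents)
    return mttd, mttr

incidents = [
    Incident(datetime(2024, 1, 1, 0), datetime(2024, 1, 1, 2), datetime(2024, 1, 1, 6)),
    Incident(datetime(2024, 1, 2, 0), datetime(2024, 1, 2, 4), datetime(2024, 1, 2, 10)),
]
mttd, mttr = soc_kpis(incidents)
print(mttd, mttr)  # 3:00:00 5:00:00
```

Tracking these two numbers period over period gives a simple, measurable signal for continual service improvement: both should trend downward as the SOC matures.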

Those are the essentials you need to know about SOCs.

Now, let's look at what Managed Security Services, or Security Insight Services, are.

SIS (Security Insight Services)

We all know about the huge number of online threats and cyber-attacks occurring worldwide. Many of them happen because of a lack of essential security tools, equipment, and services. Many businesses are deeply concerned about the security of their data and potential loss of business, but they don't get proper solutions. They often worry about how prepared their organization is to handle online crises.

Security Insight Services is the solution to these problems: a one-stop shop for current and potential online threats and attacks.

The offerings by SIS

• Project-Driven Approach

• Security Posture Assessment

• Security Incident & Threat Analysis

• Gap Analysis

• Network Security Assessment

• Malware Threat Modeling

• Database Activity Monitoring & Vulnerability Scanning

• SIEM Effectiveness Modeling

• Configuration Auditing

• Process Auditing

• Application Vulnerability Assessment

• Email System Assessment

• Wireless System Assessment

• DDoS Attack Preparedness Testing

• DLP Analysis

The Need for SOC and SIS

On average, it takes 99 days for an attack to be identified once it happens. That is a long time. It shows why data protection and privacy depend on strong security, and why cyber security technology urgently needs innovation. Many people forget that having the right tools and processes isn't enough. You can still be vulnerable if you don't monitor your systems, detect emerging threats, and change your systems and operations whenever an attack or threat is identified.

Many organizations are now becoming aware of this and want to build their own SOC to gain more control over data safety, monitoring, and response. A SOC build project has a strategic business impact, which makes it a critical initiative for these organizations.

Conclusion

The points above cover the ideal SOC, what it requires in every aspect, the rise of SIS (Security Insight Services), and why both SOC and SIS are vital. Running a SOC requires considering a comprehensive range of cyber security aspects, high-level skills, and key competencies. Building a SOC combines business strategy with strong security protection delivered as a service.

Teamwork, strong leadership, and motivation are vital for every member of the team, especially the manager. A fully functional SOC is a complex project because it has to deal with a wide, endless range of problems related to data security. As time goes on, there will be more challenges, and a SOC has to be prepared for them.

There will be a constant need for high-end online security services, and everyone has to brace for it! The SOC team has a lot of work to do, and must do it tirelessly.

Many businesses will have to choose among the best online security services or SOCs, and many more of them are likely to emerge in the near future.

The point, then, is that every business should find a capable SOC that meets its security needs and strengthens the organization’s overall security structure.

Cloud Metering and Billing

Background

Cloud computing is no longer an option; it is the standard way for businesses to run their applications. Cloud computing helps orchestrate IT infrastructure and provide IT services as a commodity on a service-based model. Whether businesses rent these services or own them privately, they expect the cloud service provider to provide insights into resource utilization and metering for capital management and auditing.

Each cloud service provider has its own way of deploying and metering resources, and this differs from the traditional IT business model at every stage, from procuring resources to provisioning them for service deployment. Improved IT infrastructure management, granular resource metering, and the ability to determine expenditure per service shift the capital expenditure model to an operational expenditure model.

CIOs who know where their money comes from are in better control of their finances. Charge back or show back can help engage the business in IT spending and value, but the effort must be worthwhile. Some CIOs simply want to stop the business from consuming more and more IT while blaming IT for the cost and demanding that it cost less.

Well-implemented charge back can make the relationship between spending and revenue more transparent and intuitive. This reduces the need for expensive governance committee meetings and management interventions, freeing the organization to focus on optimizing all business spending.

Charge back is often a source of contention between IT executives and business leaders, but it need not be. CIOs can use charge back to transform their team's relationships with business stakeholders, improve financial transparency, and gain additional funding.

Because IT is a cost center, its budget is always, in effect, charged back against the organization's business revenue, even when the IT organization does not directly charge back for IT services.

Organizations that lack financial transparency in their service delivery are vulnerable to time-consuming audits and unbudgeted tax invoices.

Public cloud service providers handle the overhead of managing IT hardware infrastructure, so organizations can focus on their core business functionality. In a private cloud owned by an organization, the entire stack is managed by the owner or outsourced to third-party service integrators. In both cases, regular insight into resource metering and its cost is required for planning and sound strategic decisions.

Efficient IT infrastructure management is incomplete without aligning IT resources with cost. It is also essential to map the consumption of these resources per user in order to determine efficiency and profitability. Gathering data and generating insights is necessary for continuous improvement and getting maximum returns on investments.

Multi-Billing in eNlight 360

eNlight 360 comes with cloud metering and billing out of the box. As a leader in cloud orchestration software, eNlight 360 provides IT infrastructure management and enables application deployment on virtualized resources, multi-tenant operations and Multi-Billing models.

At the base level, eNlight 360 provides virtual machine resource metering. Real-time processor, memory, disk and bandwidth utilization is reported for static as well as dynamically auto-scalable virtual machines. These resources can be mapped directly to per-unit rates, which yields statistics on the monetary cost of resource utilization.

eNlight 360’s Multi-Billing module, combined with its multi-tenant architecture, enables businesses to gather monetary resource consumption statistics at the business unit, department and individual user level. eNlight 360 provides multiple billing models that suit almost all business models. They are –

1. Dynamic Pay-Per-Consume

2. Fixed Pay-Per-Use

3. Service Based Billing

Dynamic Pay-Per-Consume Billing

Charging for resources based on consumption rather than allocation is Dynamic Pay-Per-Consume Billing. It leverages eNlight 360’s auto-scaling technology to provide a chargeback mechanism that bills IT resources for what is consumed rather than what is allocated.

eNlight 360 enables users to deploy auto-scalable virtual machines that scale dynamically according to resource requirements. Compute resources are allocated to and deallocated from the virtual machine in real time. Thanks to auto-scaling, a virtual machine can run on bare-minimum resources and demand more as and when required. For example, a virtual machine can run with a minimum of 2 vCPU and 2 GB RAM at 2:00 am and demand 4 to 6 vCPU and 12 to 16 GB RAM at the 12:00 pm peak. This leads to dynamic resource utilization, with wavy resource utilization graphs.

eNlight 360 allows such dynamic resources to be billed at the granularity of minutes. Dynamic virtual machines are provisioned with min/max resource caps and scale between them, so at any point a virtual machine consumes resources somewhere within its caps. Dynamic Pay-Per-Consume billing meters these resources dynamically and charges for what is consumed, based on the per-unit rates defined in eNlight 360’s chargeback system.
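The per-minute metering described above can be illustrated with a minimal Python sketch. It assumes hypothetical per-minute vCPU and RAM readings for a single dynamic VM and hypothetical per-unit rates; the names `Sample`, `Caps` and `pay_per_consume` are illustrative only and are not part of eNlight 360’s actual chargeback system or API. Each reading is clamped to the VM’s min/max caps, and each minute is charged at the configured unit rates.

```python
from dataclasses import dataclass


@dataclass
class Sample:
    """One per-minute utilization reading for a dynamic VM (hypothetical format)."""
    vcpu: int
    ram_gb: int


@dataclass
class Caps:
    """Min/max resource caps set when the dynamic VM is provisioned."""
    min_vcpu: int
    max_vcpu: int
    min_ram_gb: int
    max_ram_gb: int


def clamp(value: int, low: int, high: int) -> int:
    """Keep a reading within the VM's resource caps."""
    return max(low, min(value, high))


def pay_per_consume(samples: list[Sample], caps: Caps,
                    rate_vcpu_min: int, rate_gb_min: int) -> float:
    """Charge every minute for the resources the VM actually consumed.

    Rates are hypothetical per-unit, per-minute prices; a real chargeback
    system would load them from its own configuration.
    """
    total = 0.0
    for s in samples:
        vcpu = clamp(s.vcpu, caps.min_vcpu, caps.max_vcpu)
        ram = clamp(s.ram_gb, caps.min_ram_gb, caps.max_ram_gb)
        total += vcpu * rate_vcpu_min + ram * rate_gb_min
    return total


# One quiet hour at the minimum (2 vCPU, 2 GB) and one peak hour at the max (6 vCPU, 16 GB).
caps = Caps(min_vcpu=2, max_vcpu=6, min_ram_gb=2, max_ram_gb=16)
night = [Sample(vcpu=2, ram_gb=2)] * 60
peak = [Sample(vcpu=6, ram_gb=16)] * 60
print(pay_per_consume(night + peak, caps, rate_vcpu_min=2, rate_gb_min=1))  # 2040.0
```

For comparison, billing the same VM at its maximum allocation for both hours would cost 120 × (6 × 2 + 16 × 1) = 3360 units at these hypothetical rates, which illustrates the difference between charging for consumption and charging for allocation.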

eNlight 360’s auto-scaling enables a higher server consolidation ratio, while Dynamic Pay-Per-Consume Billing enables consumption-based cloud resource metering for these auto-scalable virtual machines.

Fixed Pay-Per-Use Billing

Charging for resources based on allocation is Fixed Pay-Per-Use Billing. Essentially, it is direct billing based on the units allocated from the pool of cloud resources. Unlike Dynamic Pay-Per-Consume billing, Fixed Pay-Per-Use billing charges for what is allocated, not what is consumed. This is the conventional billing model implemented across most of the cloud market.

In eNlight 360, virtual machines with fixed resources, known as static virtual machines, can be provisioned, for example, a VM with 8 vCPU and 12 GB RAM. The billed resource consumption of a static virtual machine equals its allocated resources. This leads to fixed resource utilization, which can be charged at fixed flat rates.
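By contrast with consumption-based billing, a fixed Pay-Per-Use charge depends only on what was allocated. The minimal sketch below assumes hypothetical hourly rates per vCPU and per GB of RAM; `pay_per_use` is an illustrative name, not an eNlight 360 function.

```python
def pay_per_use(alloc_vcpu: int, alloc_ram_gb: int, hours: int,
                rate_vcpu_hour: int, rate_gb_hour: int) -> int:
    """Charge a static VM for its allocated resources, whatever it actually consumed.

    Rates are hypothetical flat hourly prices per vCPU and per GB of RAM.
    """
    hourly = alloc_vcpu * rate_vcpu_hour + alloc_ram_gb * rate_gb_hour
    return hourly * hours


# The static VM from the example above: 8 vCPU and 12 GB RAM, billed for one day.
print(pay_per_use(8, 12, hours=24, rate_vcpu_hour=2, rate_gb_hour=1))  # 672
```

Because utilization never enters the calculation, an idle static VM and a fully loaded one cost the same, which is what makes this model predictable.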

Services Based Billing

Charging tenants based upon service deployment is Service Based Billing.

In eNlight 360, a service can be deployed as a group of related resources, for example, a mail service consisting of an email server and backup servers. This group of resources can then be charged a flat amount based on the charges and policies defined in eNlight 360’s billing system.

Service Based Billing differs from Pay-Per-Consume and Pay-Per-Use in that it lets you set flat rates and charge groups of resources based on those rates. This flat chargeback model groups application deployments and resources under one common financial entity and simplifies the billing of related resources.
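A minimal sketch of service-based billing, assuming a hypothetical model in which each service carries one flat rate per billing period and belongs to a department. The services, departments and rates below are invented for illustration and do not come from eNlight 360.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class Service:
    """A group of related resources billed as one financial entity (hypothetical model)."""
    name: str
    department: str
    flat_rate: int  # flat charge per billing period, in hypothetical currency units
    resources: list[str] = field(default_factory=list)


def bill_by_department(services: list[Service]) -> dict[str, int]:
    """Sum flat service charges per department.

    The resources inside a service are not metered individually, so adding
    a server to a group does not change that service's charge.
    """
    totals = defaultdict(int)
    for s in services:
        totals[s.department] += s.flat_rate
    return dict(totals)


services = [
    Service("Mail", "Operations", 5000, ["email-server", "backup-server-1", "backup-server-2"]),
    Service("Intranet", "HR", 3000, ["web-server", "db-server"]),
    Service("Payroll", "HR", 4000, ["app-server"]),
]
print(bill_by_department(services))  # {'Operations': 5000, 'HR': 7000}
```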

Conclusion

Cloud is the de facto approach to deploying services and managing IT infrastructure, and a clear view of resource metering from a financial perspective is critical. eNlight 360 offers multiple chargeback models that suit almost all business requirements. Service Based Billing enables charging groups of resources at fixed flat rates across services deployed in different departments or business units.

eNlight 360 provides highly granular resource utilization metering, which can be charged using the Pay-Per-Consume and Pay-Per-Use billing models. Pay-Per-Use billing provides more control and better granularity in charging for IT resources: these resources are charged against static utilization, and different per-unit rates can be configured in the system. The Dynamic Pay-Per-Consume billing model is exclusive to eNlight 360 and leverages its patented auto-scaling technology to provide a chargeback mechanism for dynamically scaled resources in real time.

With eNlight 360’s Multi-Billing combined with its multi-tenant architecture, CxOs can experience next-generation IT resource management from a single cloud management portal.

About US:

For more information, visit us at: cloud services India AND eNlight 360

ESDS is proud to be Indian government’s digital partner in empowering MSMEs

With a view to enlarging its footprint in the delivery of products and services in the MSME ecosystem, SIDBI has recently launched a series of digital initiatives involving portals such as sidbi.in, startupmitra.in, cgtmse.in, mudra.org.in and udyamimitra.in. ESDS is proud to be associated with these initiatives…

SIDBI

Small Industries Development Bank of India (SIDBI) set up on 2nd April 1990 under an Act of Indian Parliament, acts as the Principal Financial Institution for Promotion, Financing and Development of the Micro, Small and Medium Enterprise (MSME) sector as well as for co-ordination of functions of institutions engaged in similar activities.

Stand-Up India

The Stand-Up India scheme aims to promote entrepreneurship among women and members of Scheduled Castes and Scheduled Tribes. The scheme is anchored by the Department of Financial Services (DFS), Ministry of Finance, Government of India. It facilitates bank loans between Rs 10 lakh and Rs 1 crore to at least one Scheduled Caste (SC) or Scheduled Tribe (ST) borrower and at least one woman borrower per bank branch for setting up a greenfield enterprise.

Mudra Loans

Pradhan Mantri MUDRA Yojana (PMMY) is a scheme for providing loans of up to Rs 10 lakh to non-corporate, non-farm small/micro enterprises. These loans are classified as MUDRA loans under PMMY and are given by Commercial Banks, RRBs, Small Finance Banks, Cooperative Banks, MFIs and NBFCs. Borrowers can approach any of these lending institutions or apply online through this portal.

CGTMSE

Availability of bank credit without the hassle of collateral or third-party guarantees is a major source of support for first-generation entrepreneurs seeking to realize their dream of setting up their own micro or small enterprise. With this objective in view, the Ministry of MSME launched the Credit Guarantee Scheme to strengthen the credit delivery system and facilitate the flow of credit to the MSE sector. To operationalize the scheme, the Government of India and SIDBI set up the Credit Guarantee Fund Trust for Micro and Small Enterprises (CGTMSE).

Udyami Mitra

SIDBI’s Udyami Mitra is an enabling platform that leverages the IT architecture of the Stand-Up Mitra portal and aims to make it easier for MSMEs to access their financial and non-financial service needs. As a virtual marketplace, the portal endeavors to provide ‘End to End’ solutions not only for credit delivery but also for a host of credit-plus services, such as handholding support, application tracking and multiple interfaces with stakeholders.

About US:

ESDS Core Banking software offers end-to-end core banking hosting services at the lowest cost. For more information, visit us at: Core Banking Software Solutions

ESDS’s Disaster Recovery offering comes to DNDCCB’s rescue

A circular from the RBI mandated that all co-operative banks implement CBS (Core Banking Solution), which provides round-the-clock processing for all of a bank’s products, services and information. DNDCCB (Dhule & Nandurbar District Central Co-operative Bank Limited) opted to set up a data center on its own premises, and ESDS was selected as the end-to-end solution provider for setting up the data center, redundant network connectivity to all branches, and Disaster Recovery at the ESDS data center on a cloud hosting model.

Incident

On Sunday, April 17, 2016, officials at Dhule & Nandurbar District Central Co-operative Bank Limited were thrown into turmoil when they saw fumes coming out of the windows of their head office. The fire started at around 8:30 am on the third and fourth floors of the bank, where the corporate offices were located. DNDCCB’s on-site DC, located in the same building, hosted all the critical data and client information, along with all transaction and banking operations information. Losing this data could have had serious consequences. The fire was so intense that it took around 70 fire brigades and 5 hours to bring the situation under control. The Bank lost all of its IT assets and important documents in the fire, but thankfully the DC was on the second floor, so it was unharmed. Although the servers and storage in the DC were not harmed, the outdoor units of the PACs (precision air conditioners) and the electrical systems were damaged, so the DC became completely inaccessible.

Since ESDS had already put a Business Continuity Plan in place, all the data was safe and business operations could be resumed without any loss of data.

Execution Framework

ESDS’s dedicated monitoring team caught sight of the situation and immediately instructed the bank officials to shut down DC operations on priority and have the DC sealed.

DC Security team contacts BCP Committee for DR Approval:

The incident took place at around 8:30 am, and data had been backed up to the DR site (ESDS Data Center) until 8:28 am. As soon as the Security team informed ESDS of the situation, the 24/7 support team contacted the DNDCCB BCP Committee. After investigating the complete situation, it was concluded that the bank’s head office had taken a major toll from the catastrophe and that it could take weeks, if not months, for the bank to fully resume standard operations. In the meantime, ESDS’s Service Delivery team took charge, secured the DNDCCB DC infrastructure by taking preventive measures on-site, and obtained the BCP Committee’s consent to initiate the DR process.

With the consent of DNDCCB’s BCP Committee, ESDS was able to initiate the DR switchover within 2 hours of the disaster. The Dhule BCP team was then informed of the DR activation and asked to verify data integrity and application accessibility at their end.
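The timeline above also gives the worst-case data-loss window directly: anything written after the last replication to the DR site at 8:28 am and before the incident at around 8:30 am was at risk. A small Python check of that arithmetic, using only the two times stated in this case study (the helper name `data_loss_window` is illustrative):

```python
from datetime import datetime, timedelta


def data_loss_window(last_replicated: datetime, incident: datetime) -> timedelta:
    """Worst-case window of writes not yet replicated to the DR site."""
    if incident < last_replicated:
        raise ValueError("incident cannot precede the last replication")
    return incident - last_replicated


# Times from the case study (17 April 2016); the incident time is approximate.
window = data_loss_window(datetime(2016, 4, 17, 8, 28), datetime(2016, 4, 17, 8, 30))
print(window)  # 0:02:00
```

The replication interval, not the speed of the switchover, is what bounds this window.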

Service Delivery

After the successful switchover to the DR site at ESDS, the Service Delivery team followed up for another 4 hours and made sure that all 90 DNDCCB branches were connected to the DR site and that operations were running smoothly at all of them. “Complete Disaster Recovery” was achieved with near-zero data loss.

Operations Recovered from DR Site:

The Dhule & Nandurbar DCCB successfully transitioned all 90 of its branches to the DR site and ensured that the bank’s operations were not hampered. To the outside world, the bank was open for business as usual. The Bank has been able to carve a path toward business benefits by offering state-of-the-art services to customers through its branches across the Dhule & Nandurbar districts, with no hindrance to its work processes.

Best DR practices every organization needs to consider before implementing a DR solution:

It is very important that the DR location be at least 150 km from the DC site, but not so far that it compromises the required RPO (Recovery Point Objective) and RTO (Recovery Time Objective). The required RPO can be determined by calculating the cost of downtime for the business and weighing it against the corresponding investment in DR infrastructure. Note that besides the tangible costs of a business interruption, there are also intangible costs, such as new business opportunities lost to competitors and loss of reputation.
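The cost trade-off described above can be sketched as choosing the DR option with the lowest total of annual investment plus expected disruption cost. This is a simplified model under stated assumptions: the options, costs, RPO/RTO figures and incident frequency below are all hypothetical, and intangible costs such as lost business or reputational damage are assumed to be folded into the per-hour and per-minute estimates.

```python
from dataclasses import dataclass


@dataclass
class DrOption:
    """A candidate DR setup (all figures hypothetical)."""
    name: str
    annual_cost: float  # yearly investment in DR infrastructure
    rpo_minutes: float  # worst-case window of lost data
    rto_hours: float    # time to resume operations


def expected_annual_loss(opt: DrOption, downtime_cost_per_hour: float,
                         data_loss_cost_per_minute: float,
                         incidents_per_year: float) -> float:
    """Expected yearly cost of disruptions for one DR option."""
    per_incident = (opt.rto_hours * downtime_cost_per_hour
                    + opt.rpo_minutes * data_loss_cost_per_minute)
    return incidents_per_year * per_incident


def best_option(options, downtime_cost_per_hour, data_loss_cost_per_minute,
                incidents_per_year):
    """Pick the option whose investment plus expected loss is lowest."""
    return min(options, key=lambda o: o.annual_cost + expected_annual_loss(
        o, downtime_cost_per_hour, data_loss_cost_per_minute, incidents_per_year))


options = [
    DrOption("Nightly backup", annual_cost=100_000, rpo_minutes=1440, rto_hours=48),
    DrOption("Warm standby", annual_cost=400_000, rpo_minutes=15, rto_hours=4),
    DrOption("Hot standby", annual_cost=1_500_000, rpo_minutes=1, rto_hours=1),
]
choice = best_option(options, downtime_cost_per_hour=500_000,
                     data_loss_cost_per_minute=2_000, incidents_per_year=0.5)
print(choice.name)  # Warm standby
```

With a higher downtime cost or more frequent incidents, the same calculation tips toward the hot standby, which is the sense in which the required RPO and RTO follow from the cost of downtime.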

Importance of DR Drills:

DR drills play a vital role in the whole process, but their importance is often ignored by many organizations. ESDS performs at least two DR drills every year with the DNDCCB team to test all applications and work out ways to access critical applications and programs in the event of a disaster. Any discrepancies found during a drill are rectified so that they do not surface during an actual disaster. This is one of the most crucial parts of any organization’s disaster recovery plan. In the event of a disaster where operations at the head office (HO) are down, as in DNDCCB’s case, the RBI has very strict CBS policies that must be adhered to, and non-compliance can result in voided SLAs and cancelled licenses.

In this article, we have outlined DNDCCB’s present business continuity arrangements in light of the requirements placed on financial institutions. It should also be noted that although the Bank’s DR plan includes provisions for extremely severe conditions resulting from a disaster, not all financial institutions need to be prepared for such conditions in the same manner.

Significance of DR

The 17 April fire at the DNDCCB head office demonstrated the significance of business continuity planning and disaster recovery that address wide-ranging interruptions, and prompted numerous financial institutions to audit and strengthen their own DR arrangements. Supporting these efforts by financial institutions helps ensure the effective functioning of payment and settlement systems and the security and stability of the financial market in India, even in times of disaster.

“Disaster Recovery isn’t a one size fits all methodology. Every business is distinctive and every application, a group of clients and organization will have distinct necessities. Our real-time auto scaling Cloud platform is a perfect fit for all types of Organizations and all such DR setups are managed and monitored 24×7” says Piyush Somani, MD & CEO, ESDS Software Solutions Pvt. Ltd.

Disaster recovery and business continuity planning are fundamental parts of an organization’s overall risk management. Since most threats cannot be eliminated, organizations implement disaster recovery and business continuity plans to prepare for unexpected disasters. Both are equally critical because they set out detailed strategies for how the business will continue after severe interruptions and catastrophes.

In a disaster, continuing your organization’s operations depends on the ability to replicate your IT systems and data. A disaster recovery plan stipulates how an organization will prepare for a disaster, how it will respond, and what steps it will take to ensure that operations can be restored. Disaster recovery covers most of the steps required to plan for and adapt to a potential disaster, with a roadmap that restores operations while minimizing the long-term negative impact on the organization.

A business continuity plan, on the other hand, takes a more thorough approach to keeping your business operational, not only after a natural disaster but also during smaller disruptions. Business continuity involves keeping all parts of a business functioning, not just technology systems. This plan is a relatively new approach that sets out the steps an organization must take to minimize the impact of service interruptions, which limits the short-term negative effect on the organization.

Key Takeaways:

- DNDCCB was able to tackle a crucial disaster situation which could have hampered the entire operation of the bank.

- 100% uptime for Data Center, DR, and 99.95% connectivity uptime achieved for all branches

- The entire set-up deployed for DR is capable of recovering in case of disasters at a primary location within an hour (RTO = 1 hour).

- Secure Connectivity solution from 90 branches to the DC

- Prior DR drills ensured a smooth and secure move to the DR site after the incident.

- Disaster Recovery monitoring with the eMagic DCIM tool made it easy to address the calamity the moment it happened and take appropriate action.

- A short RPO helped achieve zero transaction loss.

- The bank was able to continue regular operations within an hour of the incident.

The objective of this article is to make banks and financial institutions aware of the importance of DR and business continuity plans. It is very important to have the right approach, well-defined DR plans and an experienced technology partner in place. DNDCCB was smart enough to do so. Are you?

DNDCCB

Mr. Dhiraj Chaudhary

CEO, DNDCCB

Data sensitivity is an integral part of any organization, and DNDCCB, being a co-operative bank, had all its critical data hosted at the Dhule Data Center. DNDCCB had the foresight and vision to see the need for a DR solution and, with the help of ESDS, proactively implemented one around 2 years ago. The DR site was up and fully functional, with regular DR drills and testing performed by ESDS at least twice a year. DNDCCB was never prepared for any disaster, but the RBI mandate to have a fully functional Disaster Recovery site is what saved us from a major fire that completely destroyed our head office and badly affected the Data Center at Dhule. The bank highly appreciates ESDS’s support in the successful and efficient transition of its 90 branches to the DR site, which ensured that the bank’s operations were not hampered by the fire at its head office. The Bank has been able to carve a path toward business benefits by offering state-of-the-art services to customers through its 40+ branches across the Dhule and Nandurbar districts, with no hindrance to its work processes. The bank was fully functional from the very next day without any data or transaction loss. Network service was extended to the other 50 branches within a few days, and all 90 branches started running from the Disaster Recovery cloud hosting solution provided by ESDS. All our branches have continued to function from ESDS’s DR solution for the last 2 months.

Mr. Rajvardhan Kadambande

Hon. Chairman, DNDCCB

After the fire incident, ESDS’s DR rescue team was at the incident site within 2 hours. After analyzing the complete scenario and understanding the criticality of the situation, the support team worked 24/7 on the DR activation process, ensuring that all branches were fully operational the very next day. I would like to extend my gratitude on behalf of the bank. We are really thankful for ESDS’s exuberant support at such a crucial moment. Loss of any data would have created major problems for DNDCCB, but we were fortunate that ESDS restored the entire banking operation from the DR site with zero data loss.

About US:

For more information, visit us at: Disaster Recovery AND Data Center

Here’s why 280+ Indian banks chose ESDS’ Banking Community Cloud services

Cloud has played an essential role in the banking sector’s digital transformation from the very beginning, ever since banks started adapting to market changes and incorporating new technology trends. It was only a matter of time before ESDS introduced the concept of the Banking Community Cloud in the IT and BFSI segment, and this ESDS initiative has proved very useful at the national level. It is the world’s first BFSI Community Cloud and has already connected more than 280 Indian co-operative banks. ESDS’ Banking Community Cloud platform includes everything from digital banking, IT infrastructure, a hosted payment platform and an AI chatbot to its own security scanner, MTvScan. ESDS currently connects to and monitors 2,000+ bank branches, more than 280 banks are hosted on the eNlight Cloud Platform for DC & DR services, and we were the first to successfully implement the ASP model in India.

What makes ESDS’ Banking Community Cloud the most preferred choice?

ESDS specializes in delivering turnkey DC services and business continuity with state-of-the-art facilities, fully supported by its exuberant project engineers. We are a single-point operator responsible for all data center services, including consulting, implementation, RIM and monitoring. We design, supply, install and operate data centers at full capacity, with the expertise to customize solutions to business requirements. ESDS’ turnkey data center solution can also reduce your total cost of ownership. ESDS has tie-ups with numerous CBS and ASP vendors in the market, who trust us because their applications run stably in our cloud environment. No ASP has ever faced any issues after being hosted with us. For small banks that cannot invest much in DC infrastructure, ESDS provides flexible packages, including affordable, customized commercial payment models, to boost the small banking sector. ESDS’ exuberant support, 24x7 availability and on-time service delivery, along with certifications such as STQC, PCI-DSS and ISO, have made us the preferred choice of banks.

ESDS’ USP:

We are so far the strongest data center services provider with a set of solutions for the banking sector. Our deep understanding of core banking solutions, coupled with complete banking services and additional services such as ATMs, POS machines, cards and connectivity, keeps us ahead of the game. Our BFSI service portfolio includes Core Banking, IMPS, UPI, Cheque Truncation System (CTS), ATM Switching, Document Management System (DMS), Electronic Know Your Customer (eKYC), Central Know Your Customer (CKYC), Anti Money Laundering (AML) and Asset Liability Management (ALM), as well as applications in the insurance segment.

ESDS’ Banking Community Cloud is created especially for the co-operative banking sector and is compliant with all RBI guidelines, the IT Act, PCI DSS audits and all the rules set by regulatory authorities. Moreover, the cloud takes care of scalability and performance and fulfills all the demands of the banking sector. Solutions such as colocation, dedicated server hosting, private cloud hosting and public cloud hosting are customized to each bank’s requirements. Under RBI rules, all banks must conduct DR drills. ESDS conducts these drills for banks regardless of whether their DR is hosted with us, despite challenges such as obtaining permissions and arranging the users required to perform testing.

What are the pros of getting hosted on eNlight Cloud?

• A flexible billing model, in which billing starts only when the application is LIVE in all branches

• The pay-per-consume billing model saves a lot of expense, as clients pay only for the resources they consume

• Customers are offered virtual infrastructure instead of dedicated servers

• You get an added benefit as the CAPEX to OPEX profit ratio is high

• Clients also get bundled DC-DR package

We at ESDS also expect to onboard 1,000 more co-operative banks by 2020 and make the banking sector agile and competitive.

About US:

For more information, visit us at: Core Banking in India AND digital banking

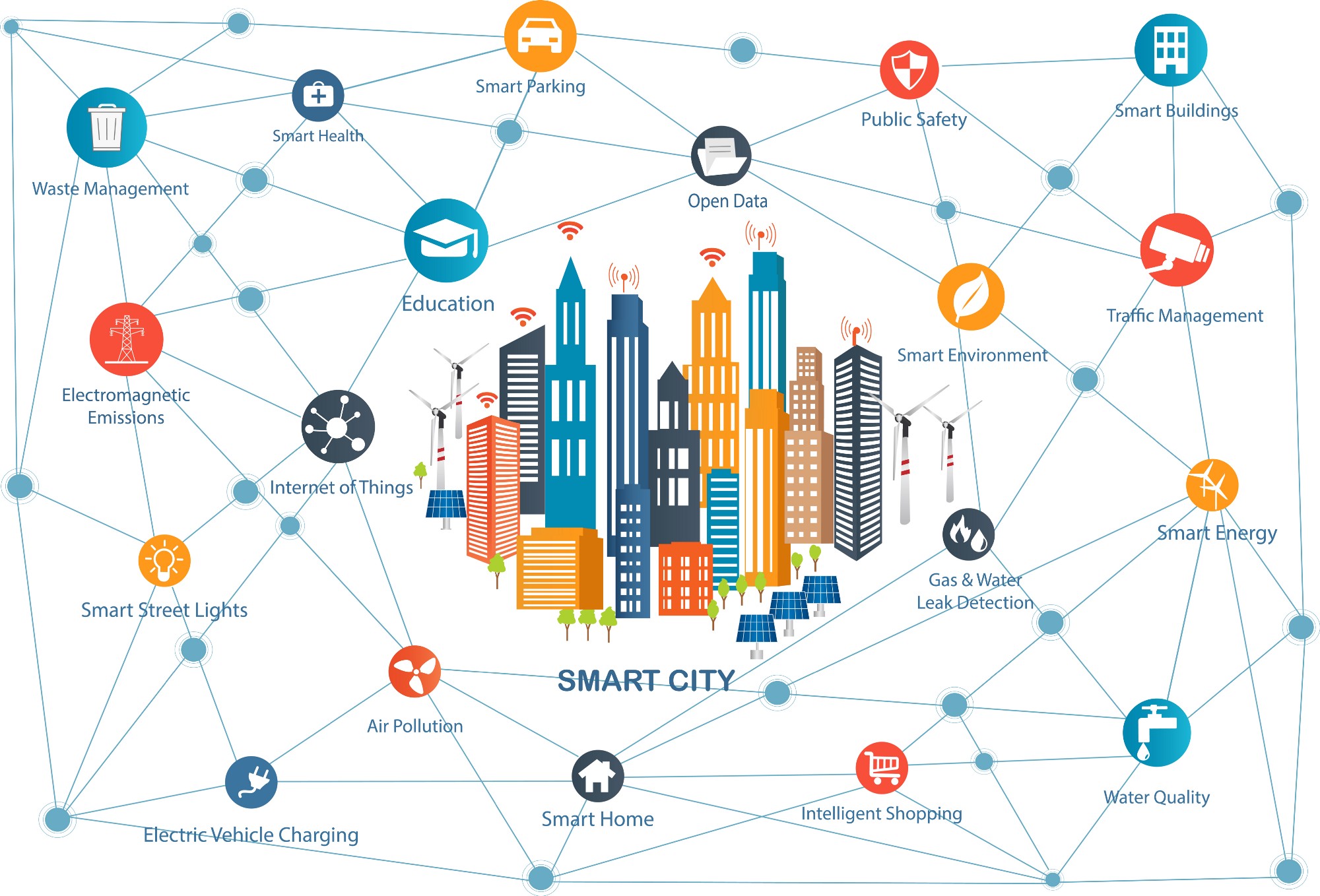

Why Smart Cities need solid cloud computing foundation

A city is as smart as its people, but also as smart as its computing prowess, and in that department the cloud is the overarching technology, with supercomputer-like proficiency. The first-ever IDC Smart Cities Spending Guide, released in March this year, predicted that spending will accelerate over the 2016-2021 forecast period, reaching $45.3 billion in 2021. In Asia-Pacific (excluding Japan), smart cities are expected to consume $28.3 billion in 2018 alone! With such investment-heavy projects in the offing, using the best available technology is only natural.

About Smart Cities

To achieve sustainable economic development and improve citizens’ quality of life, countries all over the world are moving toward establishing ‘smart cities’. A city cannot be termed smart merely because it has a certain amount of automation in its systems. A city can be called 'smart' if economic development and a high quality of life are achieved along with organized conservation of natural resources and the participation of its citizens. Investments in human capital and technology should produce a smart city that includes networking applications, data management and optimization, sensors, software and real-time information analytics that can transform the urban environment and address specific needs.

The use of ICT solutions is therefore inevitable. These can help resolve urban problems and monitor city functions. While new technology and innovation are the need of the hour in any smart city solution, there is also a need for great capacity.

Cloud Technology

The common thread connecting the entire smart city is data: not just some data, but terabytes and petabytes created by devices connected to people, buildings and transportation, among other things. Without a strategic approach to managing this data, smart cities cannot deliver on their promise.

Handling such huge quantities of heterogeneous data requires high storage capacity and computing power. For this, the continually developing cloud computing landscape, coupled with the Internet of Things, needs to be widely deployed in cities. The cloud will facilitate storage, integration, processing and analysis of this big data in short time frames.

Currently, many IT-based citizen services are delivered by domain-specific vendors through tightly coupled systems. Services like transportation or health care have domain-specific application requirements, leading to isolated systems in which infrastructure and application logic are tightly bound together. Scalability is a challenge in these systems, and the creation of new services is hampered by closed relationships between stakeholders. Cloud solutions can reduce such intergovernmental silos, in which different departments have no idea what other departments are doing. A domain-independent, cloud-based service-delivery platform for smart cities provides an open, scalable platform and encourages collaboration between stakeholders in both IoT and the cloud.

Cloud solutions can also support both public and private deployment models. In the former, stakeholders keep their applications on a public cloud; in the latter, they deploy services on equipment at their own sites to best meet their privacy and security requirements.

The rise of IoT has produced vast numbers of interconnected devices that can be exploited for malicious purposes. These devices pose demonstrable security risks, which a well-managed cloud can help mitigate.

Clouds also help extract maximum value from the data received. Not all data needs to go to every application all the time, but the right data needs to reach the right applications at the right time. Clouds can help manage data across all environments, including the edge, data centers, private, public and hybrid clouds, and the billions of connected devices being added to the network.
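The idea of getting "the right data to the right applications" can be pictured as a simple publish/subscribe router. The following is a minimal, in-memory sketch only. The `DataRouter` class, the `"kind"` field on each reading and the topic names are hypothetical illustrations, not part of any particular cloud platform. A real deployment would use a managed message broker instead.

```python
from collections import defaultdict
from typing import Any, Callable

# Hypothetical reading format: a dict tagged with a "kind" such as "traffic" or "air_quality".
Reading = dict[str, Any]
Handler = Callable[[Reading], None]


class DataRouter:
    """Delivers each reading only to the applications subscribed to its kind."""

    def __init__(self) -> None:
        self._subscribers: dict[str, list[Handler]] = defaultdict(list)

    def subscribe(self, kind: str, handler: Handler) -> None:
        # Register an application's handler for one kind of data.
        self._subscribers[kind].append(handler)

    def publish(self, reading: Reading) -> int:
        # .get() avoids creating empty entries for kinds nobody subscribed to.
        handlers = self._subscribers.get(reading["kind"], [])
        for handler in handlers:
            handler(reading)
        return len(handlers)  # how many applications received the reading


traffic_log: list[Reading] = []
router = DataRouter()
router.subscribe("traffic", traffic_log.append)
router.publish({"kind": "traffic", "junction": "J12", "vehicles_per_min": 42})
router.publish({"kind": "air_quality", "pm25": 31})  # no subscriber, so no application receives it
```

Each application sees only the stream it asked for, which is the filtering the paragraph above attributes to the cloud layer.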

Thus, a well-built cloud platform can offer a cohesive, single-window view with rapid scalability, enabling quicker processing of data at the source for faster and more accurate results.

Well, we saved the biggest benefit for last! Clouds can reduce operating costs in smart cities. A city is not a smart one until it utilizes its resources optimally, and that includes capital.

The Digital Banking Benefits You Need to Know About

Many of us cannot imagine life without online banking. Transferring funds, checking accounts and making payments can all now be done with a few clicks on a computer or phone. Beyond these conveniences, digital banking offers much more to those who care to look. It is also breaking down many barriers and bringing real change to the world of banking. Recent advances in the banking and financial sector, such as blockchain, IoT, eKYC and artificial intelligence, are all digitally inclined. As banks, small and large, all over the world embrace automation, these modern technologies are set to change the way we look at banking. Here’s a peek into the advantages that digital or online banking offers:

1. Easy-Peasy: Though digital banking has not entirely replaced brick-and-mortar banks, it is definitely the preferred option. Long queues have been cut, and there is now almost no reason to visit a bank in person. Everything from opening an account to managing savings can be done online. Banks, too, are adding more and more services to their web portals, which are refreshed as often as needed. A virtual account in which you can clearly view your finances has helped people lead a more organized financial life.

Apart from this, 24x7 online banking is another advantage for users, who feel more in control of their accounts and finances.

2. Mobile Banking: Today, most banks have their own mobile apps that provide all the advantages of online banking on the phone. The evolution from text alerts and phone banking to app-based banking has been an important one, because it is quicker and more convenient. You can check your account while out shopping or make a speedy real-time transfer while making a purchase, so mobile banking is clearly making online banking easier.

3. Money Applications: After demonetization in India, money applications like Paytm and Tez gained enormous popularity. These apps can automatically sync with one’s online banking information and help one stick to budgets while shopping. Many of these apps work on both computers and mobile devices, which gives users more information on the go. E-statements are also important, as they help prevent one from overdrawing one’s account.

4. Security: Online transactions are often marred by a sense of insecurity, but experts believe that if one is careful, there is no safer or more private system. First, clear cookies after any banking session on a public computer. Create long, complex passwords that are hard to crack. Never share your online account information with anybody. Always keep track of your credit report. These measures help protect you from identity theft.

5. Cost-effective: For banks, digital banking has lowered operating costs by reducing back-office processing, errors, branch visits and staffing requirements. Yes, going digital does mean investing in a reliable and scalable IT infrastructure, but it also means independence from legacy systems that often stall a bank’s progress. Some banks are wary of investing in change, but smarter business leaders know that not investing in change might prove costlier later.

These savings on infrastructure and overhead costs help banks serve their customers better by offering more attractive interest rates on savings and loans. Zero-minimum-balance accounts and waived service fees are also a result of such automated banking.
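The security tip above recommends long, complicated passwords. The sketch below shows one way to generate such a password with Python’s standard `secrets` module, which is designed for security-sensitive randomness. The 20-character default and the 12-character minimum are illustrative assumptions, not a banking standard. A password manager can do the same job.

```python
import secrets
import string

# Character pool: upper- and lower-case letters, digits and punctuation.
ALPHABET = string.ascii_letters + string.digits + string.punctuation


def generate_password(length: int = 20) -> str:
    """Return a random password drawn from ALPHABET using a cryptographically secure generator."""
    if length < 12:
        # Illustrative policy floor; adjust to your bank's own password rules.
        raise ValueError("length should be at least 12")
    return "".join(secrets.choice(ALPHABET) for _ in range(length))


print(generate_password())
```

Each character is drawn independently. The sketch therefore does not guarantee that every character class appears, and some banks require that.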

Meanwhile, digitalization in banking has already helped curb several malpractices, such as the circulation of counterfeit notes, which has been a major threat to the economy for many years. Collecting customer feedback has also become easy, which enables banks to offer better services. Digital banking also means more digital data, which banks can analyze with big data techniques. This will eventually lead to better decision-making, more cost-effective solutions and an improved customer experience.

Cloud metering and billing exactly how you want it!

Background

Cloud computing is no longer optional. It is now the standard way for businesses to run their applications. Cloud computing helps orchestrate IT infrastructure and provides IT services as a commodity on a service-based model. Businesses that either rent these services or own them privately expect cloud service providers to offer insights into resource utilization and metering for capital management and auditing.

Each cloud service provider has its own way of deploying and metering resources. This differs from the traditional IT business model at every stage, from procuring resources to providing them for deploying services. Improved IT infrastructure management, granular resource metering and the ability to determine expenditure per service change the capital expenditure model into an operational expenditure model.

CIOs who know where their money comes from are in better control of their finances. Charge-back or showback can help engage the business in IT spending and value, but the effort must be worthwhile. Some CIOs simply want to stop the business from consuming more and more IT while blaming IT for the cost and asking it to cost less.

Well-implemented charge-back can make the relationship between spending and revenue more transparent and intuitive. This reduces the need for expensive governance committee meetings and management interventions, freeing the organization to focus on optimizing all business spending.

Charge-back is often a source of contention between IT executives and business leaders, but it need not be. CIOs can use charge-back to transform their team’s relationships with business stakeholders, improve financial transparency and gain additional funding.
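The core arithmetic behind charge-back (and showback) can be sketched as splitting a shared IT bill across departments in proportion to their metered usage. Under showback, these figures are only reported. Under charge-back, they are posted to each department as an internal charge. The function name, department names and figures below are hypothetical. The sketch uses `Decimal` so that money is not subject to binary floating-point error.

```python
from decimal import Decimal, ROUND_HALF_UP


def allocate_costs(total_cost: Decimal, usage_by_dept: dict[str, Decimal]) -> dict[str, Decimal]:
    """Split a shared IT bill across departments in proportion to their metered usage."""
    total_usage = sum(usage_by_dept.values(), Decimal("0"))
    if total_usage == 0:
        raise ValueError("no usage recorded")
    return {
        dept: (total_cost * usage / total_usage).quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)
        for dept, usage in usage_by_dept.items()
    }


shares = allocate_costs(
    Decimal("10000"),
    {"sales": Decimal("300"), "finance": Decimal("100"), "hr": Decimal("100")},
)
print(shares)  # sales carries 60% of the usage, so it carries 60% of the cost
```

Rounding each share to cents can leave the parts a cent or two away from the total. A production charge-back system would need a rule for assigning that remainder.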

Because IT is a cost center, its budget always comes from charge-back against the organization’s business revenue, even when the IT organization does not directly charge back for IT services.

Organizations that lack financial transparency in their service delivery are vulnerable to time-consuming audits and unbudgeted tax invoices.

Public cloud service providers handle the overhead of managing IT hardware infrastructure, while organizations focus on their core business functions. In a private cloud set up and owned by an organization, the entire stack is managed by the owner or outsourced to third-party service integrators. In both cases, regular insights into resource metering and its cost are required for planning and for making the right strategic decisions.

Efficient IT infrastructure management is incomplete without aligning IT resources with cost. It is also essential to map the consumption of these resources per user to determine efficiency and profitability. Gathering data and generating insights are necessary for continuous improvement and for getting maximum return on investment.

Multi-Billing in eNlight Cloud Platform

eNlight Cloud Platform offers cloud metering and billing out of the box. As a leader in cloud orchestration software, the platform provides IT infrastructure management and enables application deployment on virtualized resources, multi-tenant operations and multiple (flexible) billing models.

At the base level, eNlight Cloud Platform meters virtual machine resources. Real-time processor, memory, disk and bandwidth utilization is reported for static as well as dynamically auto-scalable virtual machines. This utilization can be mapped directly to per-unit rates, which yields statistics on the monetary cost of the resources consumed.
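Mapping metered utilization to per-unit rates amounts to multiplying each metered quantity by its rate. The sketch below illustrates this. The metric names and rates are hypothetical placeholders chosen for the example. They are not eNlight’s actual units, rates or API.

```python
from decimal import Decimal

# Hypothetical per-unit rates; a real platform would read these from its billing configuration.
RATES = {
    "vcpu_hours": Decimal("0.50"),
    "ram_gb_hours": Decimal("0.10"),
    "disk_gb_hours": Decimal("0.002"),
    "bandwidth_gb": Decimal("0.05"),
}


def cost_breakdown(usage: dict[str, Decimal], rates: dict[str, Decimal] = RATES) -> dict[str, Decimal]:
    """Convert metered quantities into money, metric by metric."""
    return {metric: quantity * rates[metric] for metric, quantity in usage.items()}


breakdown = cost_breakdown({"vcpu_hours": Decimal("48"), "ram_gb_hours": Decimal("96")})
total = sum(breakdown.values(), Decimal("0"))
print(breakdown, total)
```

Keeping the per-metric breakdown, rather than only the total, is what allows a report to show where the money went.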

eNlight platform’s multi (flexible) billing module, combined with its multi-tenant architecture, enables businesses to gather monetary resource consumption statistics at the business unit, department and individual user levels. The cloud platform provides multiple billing models that match almost all business models, such as:

- Dynamic Pay-Per-Consume

- Fixed Pay-Per-Use

- Service-Based Billing
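Whichever of these models produces the charges, reporting at the business unit, department and user levels is a roll-up over a tenant hierarchy. This sketch assumes a hypothetical flat record of `(business_unit, department, user, amount)` per charge. It does not describe how eNlight stores its data.

```python
from collections import defaultdict
from decimal import Decimal


def rollup(charges):
    """Aggregate (business_unit, department, user, amount) records at three levels."""
    by_bu = defaultdict(Decimal)     # Decimal() starts at Decimal("0")
    by_dept = defaultdict(Decimal)
    by_user = defaultdict(Decimal)
    for bu, dept, user, amount in charges:
        by_bu[bu] += amount
        by_dept[(bu, dept)] += amount
        by_user[(bu, dept, user)] += amount
    return by_bu, by_dept, by_user


charges = [
    ("retail", "sales", "asha", Decimal("10.00")),
    ("retail", "sales", "ben", Decimal("5.50")),
    ("retail", "support", "chen", Decimal("2.50")),
    ("corporate", "finance", "dev", Decimal("7.00")),
]
by_bu, by_dept, by_user = rollup(charges)
print(dict(by_bu))
```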

Dynamic Pay-Per-Consume Billing

Charging for resources based on consumption rather than allocation is Dynamic Pay-Per-Consume billing. It leverages eNlight Cloud Platform’s auto-scaling technology to provide a charge-back mechanism for IT resources based on the resources actually consumed rather than the resources allocated.

eNlight enables users to deploy auto-scalable virtual machines that scale dynamically according to resource requirements. Compute resources are allocated to and deallocated from the virtual machine in real time. Thanks to auto-scaling, virtual machines can run on bare minimum resources and demand more as and when required. For example, a virtual machine can run with a minimum of 2 vCPU and 2 GB RAM at 2:00 am and demand 4 to 6 vCPU and 12 to 16 GB RAM at peak time around 12 pm. This leads to dynamic resource utilization, with wavy utilization graphs.
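To see why consumption-based metering matters for a VM like the one above, compare the vCPU-hours it actually uses with the vCPU-hours it would need if it were statically sized for its peak. The hourly profile below is a hypothetical day loosely following the example: about 2 vCPU overnight, rising to 6 vCPU around noon. It is not measured data.

```python
# Hypothetical hourly vCPU usage for one day (24 samples).
hourly_vcpu = [2] * 8 + [4] * 3 + [6] * 3 + [4] * 4 + [2] * 6


def consumed_vs_allocated(samples_per_hour: list[int]) -> tuple[int, int]:
    """Return (vCPU-hours actually consumed, vCPU-hours if statically sized for the peak)."""
    consumed = sum(samples_per_hour)
    allocated_at_peak = max(samples_per_hour) * len(samples_per_hour)
    return consumed, allocated_at_peak


consumed, allocated = consumed_vs_allocated(hourly_vcpu)
print(consumed, allocated)
```

In this made-up profile, the VM uses roughly half of what a peak-sized static VM would reserve. The gap is what consumption-based billing and server consolidation aim to recover.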

eNlight Cloud Platform can bill such dynamic resources at a granularity of minutes. Dynamic virtual machines are provisioned with min/max resource caps and scale between them, so at any point a virtual machine consumes resources within those caps. Dynamic Pay-Per-Consume billing meters these resources dynamically and charges for the consumed resources at the per-unit rates defined in eNlight Cloud Platform’s charge-back system.
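Per-minute billing within min/max caps can be sketched as follows. Each minute’s usage sample is clamped to the VM’s caps and multiplied by a per-unit rate. Only vCPU is shown for brevity. Two assumptions here are illustrative rather than taken from eNlight: that the minimum cap is always billed, and that samples arrive once per minute. The rate is hypothetical as well.

```python
from decimal import Decimal


def pay_per_consume(minute_samples: list[int], vcpu_min: int, vcpu_max: int,
                    rate_per_vcpu_minute: Decimal) -> Decimal:
    """Bill each minute's vCPU usage, clamped to the VM's min/max resource caps."""
    total = Decimal("0")
    for used in minute_samples:
        billable = min(max(used, vcpu_min), vcpu_max)  # stay within the caps
        total += billable * rate_per_vcpu_minute
    return total


# Four one-minute samples for a VM capped between 2 and 6 vCPU.
bill = pay_per_consume([1, 2, 4, 8], vcpu_min=2, vcpu_max=6, rate_per_vcpu_minute=Decimal("0.01"))
print(bill)  # billable vCPU per minute: 2, 2, 4, 6
```

Memory, disk and bandwidth would be metered the same way, each with its own caps and rate.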

The platform’s auto-scaling feature enables a higher server consolidation ratio, while Dynamic Pay-Per-Consume billing enables cloud resource metering based on the actual consumption of these auto-scalable virtual machines.

Fixed Pay-Per-Use Billing

Charging for resources based on allocation is Fixed Pay-Per-Use billing. Essentially, it is direct billing based on the units allocated from the pool of cloud resources. Unlike Dynamic Pay-Per-Consume billing, Fixed Pay-Per-Use billing charges for resources based on their allocation. This is the conventional billing model implemented across most of the cloud market.

In eNlight Cloud Platform, virtual machines with fixed resources, known as static virtual machines, can be provisioned. An example is a VM with 8 vCPU and 12 GB RAM. The resource consumption of a static virtual machine equals its allocated resources. This leads to fixed resource utilization, where resources can be charged at fixed flat rates.
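For a static VM, the charge depends only on what was allocated and for how long, not on the load. The sketch below prices the 8 vCPU / 12 GB example for a 720-hour month. The hourly rates are hypothetical placeholders.

```python
from decimal import Decimal


def pay_per_use(vcpus: int, ram_gb: int, hours: int,
                vcpu_rate: Decimal, ram_rate: Decimal) -> Decimal:
    """Charge allocated resources for every hour the VM exists, regardless of actual load."""
    return (vcpus * vcpu_rate + ram_gb * ram_rate) * hours


monthly = pay_per_use(8, 12, 720, vcpu_rate=Decimal("0.02"), ram_rate=Decimal("0.005"))
print(monthly)
```

The same VM would cost the same whether it ran at 5% or 95% utilization. That predictability is the trade-off against Pay-Per-Consume billing.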

Service-Based Billing

Charging tenants based on the services they deploy is Service-Based Billing.

In eNlight Cloud Platform, a service can be deployed as a group of related resources. For example, a mail service consisting of an email server and backup servers can be charged as one group at a flat rate, based on the charges and policies defined in eNlight Cloud Portal’s system.

Service-Based Billing differs from Pay-Per-Consume and Pay-Per-Use in that it lets you set flat rates and charge groups of services at the rates defined in the system. This flat charge-back model allows application deployments and resources to be grouped under one common financial entity, which simplifies the billing of related resources.
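Service-based billing can be sketched as attaching a flat rate to a named group of resources and summing those rates per tenant. The `Service` class, the service names and the rates below are hypothetical illustrations. Note that adding a resource to a group does not change its price.

```python
from dataclasses import dataclass, field
from decimal import Decimal


@dataclass
class Service:
    """A group of related resources billed as one unit (hypothetical model)."""
    name: str
    flat_monthly_rate: Decimal
    resources: list[str] = field(default_factory=list)


def bill_tenant(services: list[Service]) -> Decimal:
    """Sum each service's flat rate; the resources inside a service do not affect its price."""
    return sum((s.flat_monthly_rate for s in services), Decimal("0"))


mail = Service("mail", Decimal("150.00"), ["email-server", "backup-server-1", "backup-server-2"])
crm = Service("crm", Decimal("300.00"), ["app-server", "db-server"])
print(bill_tenant([mail, crm]))
```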

Conclusion

Cloud is the de facto approach to deploying services and managing IT infrastructure, and a clear view of resource metering from a financial perspective is critical. eNlight Cloud Platform provides multiple charge-back models that suit almost all business requirements. Service-Based Billing enables groups of resources to be charged at fixed flat rates across different services deployed in various departments or business units.

eNlight provides highly granular resource utilization metering, which can be charged using the Pay-Per-Consume and Pay-Per-Use billing models. Pay-Per-Use billing provides more control and better granularity in charging for IT resources. Resources are charged against static utilization, and different per-unit rates can be configured in the system. The Dynamic Pay-Per-Consume billing model, which is exclusive to the platform, leverages eNlight’s patented auto-scaling technology to provide a charge-back mechanism for dynamically scaled resources in real time.

With eNlight Cloud Platform’s multi-billing combined with its multi-tenant architecture, CxOs can experience next-generation IT resource management from a single cloud management portal.

Audit Your Web Security with MTvScan Vulnerability Scanner